WikiChat: Stopping the Hallucination of Large Language Model Chatbots by Few-Shot Grounding on Wikipedia#

Motivation#

I found this paper in the excellent BAIR blog post claiming the raise of AI systems. Because, we had some interesting discussions on LLM lack of factuality, I wanted to read it a bit carefully. Better understanding how people are trying to make LLM more factual is also interesting in the context of automatic literature review –a topic that I will explore in the next months.

Review#

The paper is an interesting dive into AI system, covering a wide range of practical considerations: factuality, inference efficiency, prompts chaining, information retrieval, example simulations and label collections.

The work is anchored in the literature of retrieve-then-generate. It states that Bing Chat (ChatGPT4 based) is grounded only in 58.7% of its answers. Apart from factuality, the authors also focus on latency –being able to answer quickly– and conversationality of the AI system to build good user experiences with the system.

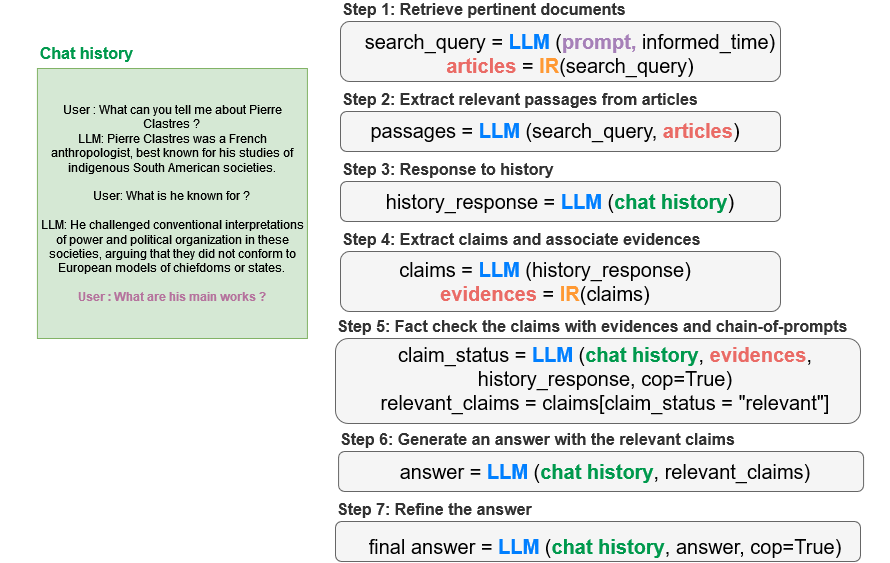

The paper breaks down a 7-step engineering system that retrieve evidences from a reference corpus (here wikipedia, but it could be replicated with other corpora) and compare them to the claims of the LLM. The authors state that whatever the underlying LLM (GPT-4, GPT3.5 or LLaMA), their system outperform the raw model for factuality, respectively by 31.2%, 27.8% or 50.2%.

The main factuality evaluation is done by human crowdsourced workers from the scale annotation platform. They feed GPT-4 simulated user/model examples to evaluators with wikipedia articles. GPT-4 extracts claims from these examples which are fact checked (triple annotation) with respect to the evidence provided in the wikipedia articles. They categorized results into three categories based on the number of views of wikipedia articles, thus reflecting the importance of each topic in the LLM training data.

The authors also conducted a real user study with 40 participants (selected with the prolific platform) allocated either with GPT4 or WikiChat_G4 to discuss any topic of their choice for five turns. On factuality, WikiChat_G4 outperfoms largely GPT-4 from 97.9% to 42.9%. The main complaint was latency.$

To reduce latency, they distill the results of wikichat_G4 into a smaller wikichat_L model by finetuning this later on a dataset of 37,499 (instructions, user, answer) pairs.

Summary of the steps#

The full process is quite complex so I tried to summarize it with one image and some pseudocode. For each of this step a dedicated prompt with few shot examples is given to the LLM (refer to the appendices of the paper for details). The IR system used are ColBERT and PLAID –not sure how these work.

Discussion#

I have the impression than a lot of effort is put in the prompts, rather than in the steps themselves which do not seem very structured. I wonder how much overfitting on the wikipedia corpus has been conducted during the design of the prompt. Put it differently, is this system robust if I change the corpus ? There is no finetuning step, so a transfer should be quite straightforward.

I found the details on the distillation step also informative.